I’ve been using Chorus for the past 6-7 months. Within the first couple of days of using it, I was telling everyone I talk with about AI stuff to try it out. Melty Labs, the company behind Chorus, subsequently built Conductor. It appears Conductor is now their primary focus, and as such they’ve decided to open source Chorus.

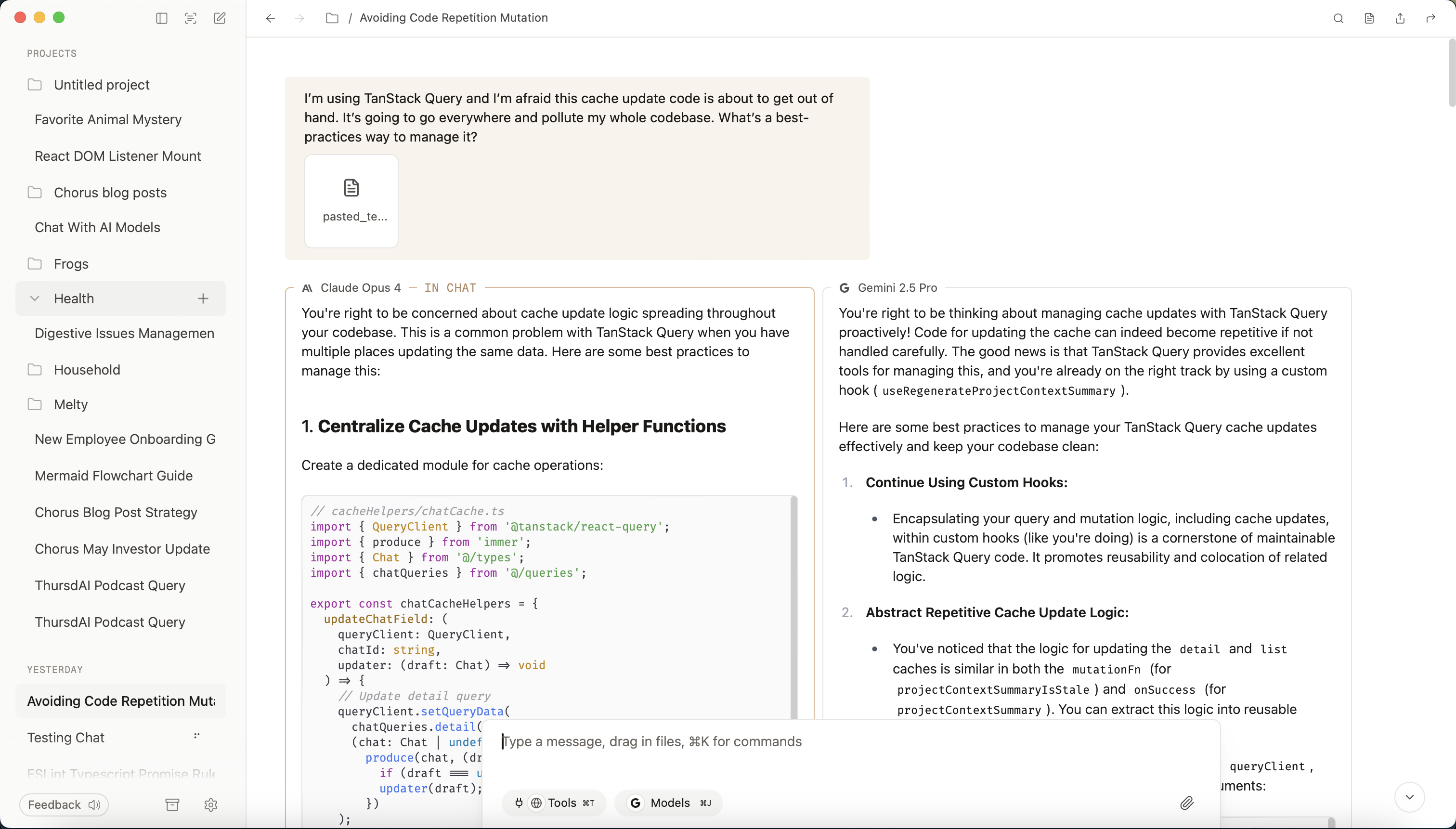

Chorus is a macOS LLM client. Its differentiating feature is that it fetches you responses from many LLMs in parallel within the same chat. I think it’s quite good software.

Chorus screenshot (Source)

It is snappy. I used to be impressed by the responsiveness of the ChatGPT app, but after having become a regular Chorus user, I can’t use either the ChatGPT[1] or Claude macOS apps without feeling like they’re just sluggish in comparison.

It has a small surface area, but executes the UX well. The key UX innovation of Chorus is its multi-model chat – you can get responses from multiple models, pick the one you want to thread into the conversation, and continue. There are also many nice affordances in Chorus that don’t exist in other clients. For example, chats allow for “inline replies” where you can ask a model about a response without adding that response to the context window. Chorus also handles pasted content much better than ChatGPT desktop or Google AI Studio. (Claude handles pasted stuff pretty well.) Chorus just feels like a tool – as opposed to a consumer app – in a way that other clients don’t.

It puts the user in control more so than any of the first-party clients. Chat branching and “previous message” editing worked in Chorus well before ChatGPT and Claude.[2] Using a third-party client like Chorus also lets you jettison the opinionated system prompts that the first-party clients inject, and lets you switch quickly between system prompts. The first-party system prompts are good in a consumer app, but Chorus makes for a better “training wheels off” LLM client.

The multi-model native chat makes it easier to build an intuitive sense of models. Interacting with Claude/Gemini/GPT-N within the same dialog gives you a much more intuitive sense of the “shape” of each of the models. It’s also quite interesting to see, say, Claude Sonnet continuing from a Gemini response, since their inherent writing styles are quite different.

It allows you to burn tokens. First-party chat clients are mostly on a flat, monthly subscription model. Their incentive is to provide you with a good experience so you don’t cancel, while otherwise minimizing the number of tokens you use. With Chorus, you pay for all your tokens. So, want to generate a response from Opus 4.5 and GPT-5.1 and Sonnet 4.5 and Gemini 3 Pro for each conversation turn? Go for it. Oftentimes, many fanned-out responses to a message will be more useful than the “best” model’s response. It’s effectively an easy way to do “LLM councils” or best-of-N.[3]
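To make the fan-out idea concrete, here is a minimal sketch of the pattern: send one prompt to several models at once, then thread only the reply you pick into the conversation. This is not Chorus’s actual code. The function names (`ask_model`, `fan_out`, `continue_with`) and the model IDs are hypothetical, and the provider call is stubbed out where a real client would make an API request with your own key.

```python
import asyncio


async def ask_model(model: str, prompt: str) -> str:
    """Hypothetical stand-in for a real provider call (stubbed, no network)."""
    await asyncio.sleep(0.01)  # simulate request latency
    return f"[{model}] reply to: {prompt}"


async def fan_out(models: list[str], prompt: str) -> dict[str, str]:
    """Send the same prompt to every model concurrently and collect replies."""
    replies = await asyncio.gather(*(ask_model(m, prompt) for m in models))
    return dict(zip(models, replies))


def continue_with(
    history: list[dict], prompt: str, replies: dict[str, str], chosen: str
) -> list[dict]:
    """Return a new history containing only the chosen model's reply."""
    return history + [
        {"role": "user", "content": prompt},
        {"role": "assistant", "content": replies[chosen], "model": chosen},
    ]


if __name__ == "__main__":
    models = ["model-a", "model-b", "model-c"]  # placeholder model IDs
    prompt = "Summarize this post"
    replies = asyncio.run(fan_out(models, prompt))
    history = continue_with([], prompt, replies, chosen="model-b")
    for turn in history:
        print(turn)
```

The unchosen replies never enter `history`, so the next turn’s context window only carries the branch you selected, whichever model ends up answering it.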

It’s very clearly not trying to get your data. Chorus originally shipped with an optional subscription model that was essentially a model proxy. However, it was always possible to use your own API keys and their privacy policy was refreshingly clear: “We don’t look at your chats, and we don’t want to.” Now that Chorus is open-sourced, you can verify that.

We’re still quite early in figuring out what UX patterns work well for LLMs. Chorus took an interesting idea, executed it well, and as a result has a really great novel UX that none of the other clients nail. There are obviously many use cases for LLMs. The spotlight now is on agentic harnesses like Claude Code, Codex, Cursor, and the like. But chat interfaces are not solved! Assuredly there is more room to explore in the “calculator for words” idea space.

I wish Charlie and team the best of luck on Conductor, and thank them for making Chorus open source. 🎉 I’m looking forward to contributing & hacking on Chorus.

1. The macOS app for ChatGPT feels noticeably sluggish now. I think this regression started around the GPT-5 release, if memory serves. My mental ranking used to be that the native macOS ChatGPT app was ~2x faster than Claude. Now, that’s been reversed.

2. Though I will say that at this point, the ChatGPT/Claude desktop apps have largely caught up.

3. Well, where N = “number of models”, not “number of responses”. I think Chorus could add support for multiple generations from the same model, but that doesn’t exist today.