It finally seems like we’re nearing the end of the acute phase of the pandemic in the US. 🙂 Vaccines are now available to all adults, which is months ahead of what I was expecting earlier this year. There are still reasons to be concerned about the global state of the pandemic, but at least locally I’m optimistic.

At work, our offices have begun to reopen at limited capacity. I haven’t made the trek back in yet, but I’ve had meetings with coworkers who have gone back to the office part time. It’s kinda strange to see folks in meeting rooms again, but it’s a nice glimpse at the post-pandemic future.

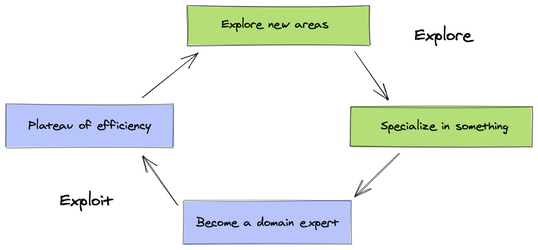

On an unrelated note, I’ve also been thinking a lot recently about learning, career progression, and effectiveness. At the end of this post, I have some links to relevant articles about this as well. Generally, I’m starting to feel like I’m nearing the end of the Plateau of Efficiency phase in the Explore/Exploit cycle:

While it’s comfortable (and quite fun!) to be in the stable phase of productive efficiency, after being there for a while, the “plateau” aspect begins to become troublesome. You realize you’re not learning as much as you were in the Explore mode, and the types of problems you’re efficient at solving start to look “samey”.

I like using this mental Explore/Exploit model because there are plenty of variables to consider, which raise interesting situation-dependent questions:

- How wide of a net do you cast in Exploring New Areas? Going back into the Explore mode can be a minor tactical realignment, or a complete strategic shift in what you focus on.

- How long do you stay in the productive Plateau of Efficiency? Too short, and you don’t fully exploit your investment in ramp-up time and skill acquisition; too long, and you miss out on other learning opportunities, or get stuck in local maxima.

- How “big” of an area do you choose to specialize in? As you build up longer-term meta skills, the size/importance/impact of the domain you specialize in can increase.

So, the natural next phase after Productive Efficiency is to begin to explore new areas. I’ve sufficiently convinced myself that I’ve been in the Exploit phase for long enough, but now the decision is how big of a realignment to do in going back to Exploring.

Anyways, my conclusions in this domain are still very TBD.

Recent Reading

Inadequate Equilibria by Eliezer Yudkowsky (3/5):

I first heard of Yudkowsky from this 2018 interview with Sam Harris and was interested in some of the ideas he had around AI alignment and existential risk. This book is not about that. This book is about markets, the role of experts, and how civilizations get “stuck” in ruts of bad incentives.

The book assumes a pretty strong familiarity with game theory and contemporary rationalism. If you have that background, then Inadequate Equilibria is full of concise mental models of where and how civilizations can “fail” to produce the outcomes we want. Without that background, I think it would be challenging to get much use out of this book, other than some entertaining stories about how the author treated his wife’s seasonal depression with an absurd number of high-output LED lightbulbs (yes, really).

Yudkowsky explores a couple of genres of civilization-scale failure, mostly in relation to the failure of experts and the failure of markets. Examples include: why education remains expensive, why our healthcare system frequently misses “low-hanging fruit” therapeutic techniques, and why “listening to experts” fails when experts don’t have sufficient incentives to fix issues in their domain.

In Yudkowsky’s view, the solution to some of these problems – at least for the individual – is to determine when it is appropriate to take the “outside view” (i.e. trust conventional wisdom) or the “inside view” (i.e. use your own personal rationality to determine a course of action, even when this goes against “expert advice”).

In general, knowing when to take the “inside view” is Really Hard. Most people feel like they’re above-average drivers, which can’t be true of everyone. So, unless you have special evidence that you are an above-average driver, you should probably take the “outside view” that you’re about average at driving, and not take any abnormal risks on the road. But, sometimes you actually do have some special knowledge or skills that give you more insight into a problem than the average person.

One notable way that this pops up is Gell-Mann Amnesia, which is when you read some piece of reporting on a subject that you know very well, identify myriad ways in which the reporting is wrong or misses key bits of context, and feel indignant about how misreported the issue is. Then, you go on to read other reporting on other topics you know less about, and trust the reporting as accurate, even though you spotted errors in the domain you know a lot about.

While there are some useful techniques at the end of Inadequate Equilibria which begin to create an “inside/outside view” decision framework, some of this ended up feeling like “surely, anyone reading this book is smarter than the average person, by dint of deciding to read a book about rationality at all, so you should probably apply the inside view more often”. Fair enough, but this left me a bit unsatisfied.

While I found Inadequate Equilibria a worthwhile read, I’d previously read Scott Alexander’s excellent longform book review, and didn’t really get much more out of reading the actual book than the review. To be a bit blunt, this is one of those nonfiction books that has some really interesting ideas, but most of them are in the first and last quarters of the book, and you can skip the middle half without missing much other than anecdotes.

Nevertheless, it’s a pretty short read, so I’d still recommend it.

The Hidden Girl and Other Stories by Ken Liu (4/5):

The Hidden Girl is a collection of short stories about AI, consciousness, environmentalism, VR, blockchain, and other such popular topics in recent “near future scifi”.

I particularly enjoyed Byzantine Empathy, a story about an “empathy-backed” cryptocurrency used to divert funds to social causes, and The Gods Will Not Be Chained, the first in a sequence of stories about consciousness uploading and hyperintelligent AI.

Liu is great at constructing compact, self-consistent worlds within just a few pages. The Hidden Girl is well curated, and navigates topics that are currently in the “inflated expectations” phase of the hype cycle, without falling into “Black Mirror”-esque cynicism or utopian optimism.

Rust/Z3 Portfolio Tool

One of the boring “adulting” chores I do every month is put some money in index funds for long-term investing. I follow a pretty simple three fund portfolio, which means that as I buy shares, I’d ideally end up with an amount of each fund that roughly aligns with the proportion I assign each fund in my portfolio.

This isn’t a particularly difficult task, but calculating the optimal number of shares to purchase to (1) get as close as possible to the total amount of money I want to invest each month and (2) keep the portfolio balanced is nontrivial. I’d basically “guess and check” until I got something close – it’s easy to get “close enough” with basic arithmetic, but each fund has a different price, so going from “close enough” to “optimal” takes some tinkering. My intuition is that this problem is pretty close to a Knapsack problem, but I haven’t thought about it enough to be sure.

Anyways. I automated away the problem using Microsoft Research’s Z3 optimization engine. In broad strokes, I constructed a model that takes in the portfolio fund allocation, the existing shares in the portfolio, and a target purchase total, and outputs the optimal number of shares of each fund to buy to rebalance.
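To make those inputs and outputs concrete, here is a minimal sketch that swaps Z3 for plain exhaustive search. It is not the Z3 model itself: the function name `best_purchase`, the fund names, prices, and holdings, and the scoring rule (imbalance plus unspent cash, weighted equally) are all assumptions made up for illustration. Money is kept in whole integer units so the arithmetic stays exact.

```python
from itertools import product


def best_purchase(prices, held, target_pct, budget):
    """Brute-force the number of shares of each fund to buy.

    prices, held, and target_pct are dicts keyed by fund name;
    target_pct holds integer percentages that sum to 100.
    """
    funds = list(prices)
    # Upper bound per fund: the most shares the budget alone could buy.
    ranges = [range(budget // prices[f] + 1) for f in funds]

    best, best_score = None, None
    for counts in product(*ranges):
        cost = sum(n * prices[f] for n, f in zip(counts, funds))
        if cost > budget:
            continue  # never spend more than the monthly amount
        values = {f: (held[f] + n) * prices[f] for n, f in zip(counts, funds)}
        total = sum(values.values())
        # Imbalance: distance of each fund's value from its target share
        # of the total, scaled by 100 to stay in integers.
        imbalance = sum(
            abs(100 * values[f] - target_pct[f] * total) for f in funds
        )
        # Assumption: unspent cash counts as much as imbalance.
        score = imbalance + 100 * (budget - cost)
        if best_score is None or score < best_score:
            best, best_score = dict(zip(funds, counts)), score
    return best


# Hypothetical three-fund portfolio; every number here is invented.
prices = {"US": 100, "INTL": 50, "BOND": 80}
held = {"US": 6, "INTL": 4, "BOND": 0}
target_pct = {"US": 60, "INTL": 20, "BOND": 20}

print(best_purchase(prices, held, target_pct, budget=250))
# {'US': 0, 'INTL': 0, 'BOND': 3}
```

Exhaustive search is fine for three funds and a small budget, but the number of candidates grows multiplicatively with each fund, which is part of why handing the problem to a solver is appealing.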

Z3 is an incredibly powerful tool, and allows you to write expressive models like this without really caring how the engine evaluates the solution. Using Z3 kinda feels like using Prolog, in that you describe a problem declaratively and the computer just magically solves it:

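```python
# Example Z3 optimization problem
from z3 import Ints, Optimize

o = Optimize()
x, y = Ints('x y')

# Constraints the solution must satisfy
o.add(x > 10)
o.add(y < 100)
o.add(5*x == y)

# Objective to maximize
o.maximize(x + 2*y)

o.check()
print(o.model())  # x = 19, y = 95
```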

I wrote a small prototype using the quite ergonomic Z3 Python library, and then built a slightly more robust version as a Rust CLI. You can find it on my GitHub.

Every time I use Z3, I’m struck by how useful it is – I think there are likely many types of interesting problems that are reducible to a representation that Z3 can just solve for you.

Assorted Links

On Work

- Difficult Problems and Hard Work

> The distinction is roughly that something is hard work if you have to put a lot of time and effort into it and a difficult problem if you have to put a lot of skill or thinking into it. You can generally always succeed at something that is “merely” hard work if you can put in the time and effort, while your ability to solve a difficult problem is at least somewhat unpredictable. [Emphasis mine]

- Finding a balance between “hard work” and “difficult problems” is tricky. “Hard work” is satisfying in the short term, but can lead to complacency if you don’t also work on longer term “difficult problems”. But only working on “difficult problems” can burn you out, since you often lack visible incremental progress.

- The brilliant bit of this framework is that, as you learn, “difficult problems” turn into “hard work”. You pick up skills and mental models that make you unconsciously competent at tasks which had previously required a lot of explicit effort. Therefore, if you notice that you’re doing mostly “hard work” in an area that used to be full of “difficult problems”, then you’ve probably hit a learning plateau. (This bit in particular is relevant to the Explore/Exploit framework I discussed earlier in this post.)

- Always be quitting

> A good philosophy to live by at work is to “always be quitting”. No, don’t be constantly thinking of leaving your job. But act as if you might leave on short notice.

- I think this is related to increasing your team’s “bus factor”, but is also generally good advice for improving a team’s health. While it can be tempting to try to remain “important” by fighting fires independently and/or developing deep knowledge in niche topics, this article makes the argument that spreading knowledge out leads to a “rising tide lifts all boats” effect. Broadly: avoid communication bottlenecks, document and share knowledge so it doesn’t leave with you, and decentralize decision making authority.

- It also probably lets you feel less bad about leaving a team (e.g. “I don’t want to leave my former team in a bad spot”) which, from a selfish perspective, is probably healthy for career growth.

- Know your “One Job” and do it first

> When you are meeting expectations for your One Job — and you don’t necessarily have to be dazzling, just competent and predictable — then picking up other work is a sign of initiative and investment. But when you aren’t, you get no credit.

- Another good article about career growth. It can feel great to contribute to “extracurricular” activities at work (e.g. mentoring, 20% projects, etc.), but it’s important not to let those contributions occur at the expense of the core work on which you’re evaluated.

On Infrastructure

- How to make the bus better

- TL;DR: Many cities’ bus systems optimize for coverage (the amount of geographic area serviced by a transit system) over ridership (the number of people who use the system). This leads to wasteful spending on low-frequency, low-ridership lines at the expense of creating better transit corridors. US cities could improve their transit systems by optimizing for ridership, correctly spacing stops along routes, and changing land-use policies to encourage public transit over, say, driving your car and parking downtown.

- Also, while making public transit free sounds like a good progressive thing to do, it can often backfire and harm transit authorities because of the way public infrastructure receives funding. (Intuitively, if you found a big pile of money, it’d probably be better on net to use that money to improve transit quality than reduce fares.)

- I don’t get the high-speed rail thing (yet)

- TL;DR: High-Speed Rail sounds very cool, in that it’s shiny infrastructure that makes you feel like you’re living in the future. However, plopping down HSR in the US probably wouldn’t improve the liveability of cities much, because we still lag behind in local rail. (This is, uhh… definitely true in Seattle)

- Why has nuclear power been a flop?

- TL;DR: Safety regulation has paradoxically ensured that nuclear energy production stays at least as expensive as fossil fuels. Current regulations don’t have a realistic model of the actual risks of nuclear power production: we currently inaccurately model the risks of mild radiation incidents, peg the cost of safety measures to the price of other energy production means, and don’t have a framework for creating test reactors which could find new methods of reducing construction costs.

“Just Trust Me”

> America has become a Microwave Economy. We’ve overwhelmingly used our wealth to make the world cheaper instead of more beautiful, more functional instead of more meaningful.

> This is where I rebel against minimalism. Though it’s a worthy counterweight to the excess of 20th-century materialism, it undervalues how material goods can become an extension of our personality. Though we shouldn’t stockpile things, our souls are nurtured by items that reflect who we are, where we’ve been, and what we stand for.

- Great article. I got sucked into the minimalist mindset for a few years when I was in college. While I think there are some useful applications of minimalism, if it’s taken too far it becomes a destructive form of asceticism. It’s possible to “treat yourself” every once in a while without engaging in performative consumerism.

Cover: Unsplash